May 30, 2004

It's begun

The good news of the day is that someone has beaten us to it and published a re-mixable film format.

May 28, 2004

Michela and her bucking flog

G'day folks. Time for another update.

It is with considerable pleasure and no small amount of relief that I can finally announce that the deal for year-one funding of MOD Films has been concluded. I've got a signed contract back from NESTA, and a seven-stage project to deliver the cinema, console demo and community dimensions of SANCTUARY is about to get underway.

More details on the plan, as it has evolved from the proposal, will be unveiled soon. It's crunch-time for a lot of decisions and I'm doing my best to get the balance right.

In response to your feedback, we are going to make a short video which can act as a more independent induction tool for new members of the project and help communicate the overall idea. I'll be soliciting feedback on this shortly.

We aim to shoot the film sometime between December 2004 and March 2005 and go into production of a PC-based re-mixable film demo immediately afterwards.

Getting us there is going to require a huge amount of effort, world-class creative and business leads, no shortage of good karma, and considerable good will. Hope you're up for it.

Talk to you soon.

.M.

PS. Anyone lurking who feels they should no longer be receiving this due to conflicts of interest, etc... now would be a good time to let me know and exit gracefully.

Jolt - UK MOD community

First contact with Dom at http://www.jolt.co.uk was promising. Jolt host 10-12 MODs, including the current top-rated MOD on moddb.com (Atlantis). They have already produced three commercial MODs for game publishers (ballpark development budgets £15K). An excellent contact.

Dom thought the idea was innovative but rested on the appeal of the film. He emphasised the importance of an FPS (first-person-shooter) MOD for any game community but agreed that this could feasibly materialise without direct funding.

Ultimately, the best trigger for external MOD activity will be the publishing of the RIG API.

Get up and move

http://www.getupmove.com/. Thanks to Matt for this one.

May 26, 2004

Concept art for Matrix sequels

CGNetworks - Concept Art Production for The Matrix Reloaded/Revolutions looks at the concept design and graphic storytelling approach used on those films.

May 14, 2004

Immersion Studios

This week I ran into Malcolm Garrett, who is working at Immersion Studios in Toronto.

Interestingly enough, they do Immersion Classrooms, Simulations, Immersion Cinema and other forms of real-time film-making.

May 13, 2004

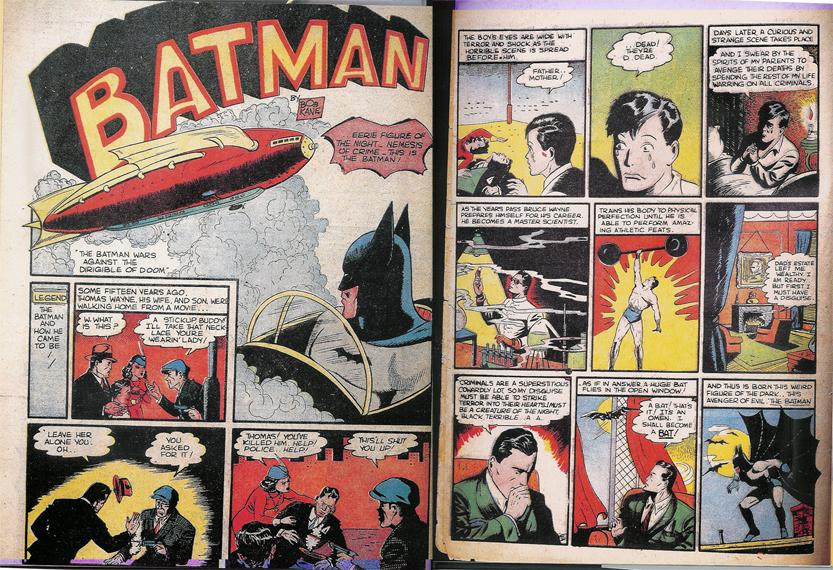

BATMAN origin story

The original 12-cell origin story for Batman, a classic superhero

May 12, 2004

Messageboard systems

Messageboard systems for evaluation:

Invisionboard (MoveableType)

vBulletin (VJforums)

PHPnuke

Ikonboard

phpBB (Arkaos)

May 11, 2004

Film licensing companies

http://www.mplc.com/

http://www.swank.com/

http://www.movlic.com/

http://www.filmbank.co.uk/

http://www.utsystem.edu/ogc/intellectualproperty/permissn.htm

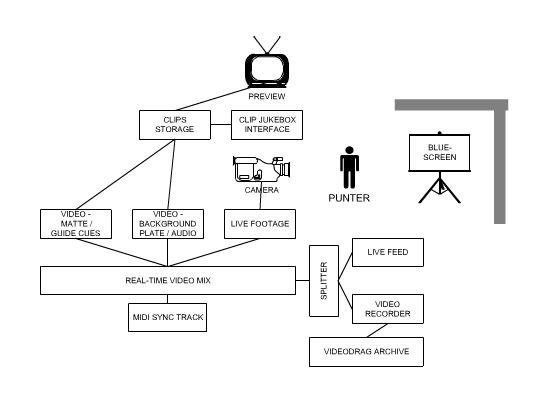

Videodrag proof-of-concept

It was live, and giggle-worthy, which may be a good thing... first cut of videodrag is in.

Notes:

USER EXPERIENCE

Choose a clip from jukebox

Stand in front of a bluescreen and be filmed miming along to clip

Watch preview for action, hard-of-hearing subtitles, and guide cues (for Close up, Mid shot, Wide shot)

Move to marker A, B, C respectively depending on the cue

Pay to have clip sent to you

POST PRODUCTION PROCESS

Top and tail clip (longer the better) taking audio into consideration

Decide placement of punter as if they were one character (i.e. there may be more than one placement possibility per clip in which case go through whole process separately and add a number to clip name)

Create matte to separate foreground from background (optional but desirable)

Determine guide cues (based on particular clip context)

Record description of context and list of cues (e.g. C\t00:00:02:23:40 means close-up at 2 min 23 s)

Prepare cues using video compositor (or custom tool)

Export video as two files (

ISSUE) Video dimensions/performance trade-off.

ISSUE) Choice of codec: PhotoJPEG/MPEG4/DV-PAL

ISSUE) Guide cues hard-coded. Can they be stored/generated separately so that lead-in timings can be altered according to difficulty setting?
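One possible answer to the question above, sketched in Python: keep the cue list as plain data (shot type plus the time the punter should be on the mark) and work out when to show each prompt from a per-difficulty lead-in. Everything named here is an assumption for illustration: the `Cue` record, the `M` and `W` shot codes (only `C` for close-up appears in the notes), the use of plain seconds rather than the timecode format, and the lead-in values themselves.

```python
from dataclasses import dataclass

# Assumed lead-in times in seconds for each difficulty setting (illustrative values).
LEAD_IN_SECONDS = {"easy": 3.0, "normal": 2.0, "hard": 1.0}

# Close up -> marker A, Mid shot -> marker B, Wide shot -> marker C.
# "C" comes from the notes; "M" and "W" are assumed codes.
SHOT_MARKERS = {"C": "A", "M": "B", "W": "C"}


@dataclass(frozen=True)
class Cue:
    shot: str        # "C", "M" or "W"
    hit_time: float  # seconds from clip start when the punter should be on the mark


def schedule_prompts(cues, difficulty):
    """Return (show_at, hit_time, marker) tuples, ordered by hit time.

    The prompt appears lead-in seconds before the hit, but never before
    the start of the clip.
    """
    lead_in = LEAD_IN_SECONDS[difficulty]
    prompts = []
    for cue in sorted(cues, key=lambda c: c.hit_time):
        show_at = max(0.0, cue.hit_time - lead_in)
        prompts.append((show_at, cue.hit_time, SHOT_MARKERS[cue.shot]))
    return prompts
```

With the cues held separately like this, the same clip could be rendered or overlaid with different lead-ins without touching the video itself; whether the compositor can overlay cues at playback time is a separate question.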

ARKAOS NOTES

Create new synth (one per movie)

Create new patch (one per clip)

Map the alpha matte to C4 (latch, set layer priority to Always in front)

Map the background plate to D#4 (latch, set layer priority to Always in background)

Map the live camera to C#4 (adjust mask settings to key out background)

Import and rename videodrag_trigger.mid to clip name

Test clip without live key, using close-up, mid shot and wide-angle biped animations

Test clip using keyed image

ISSUE) Latency of DV camera through Arkaos

MIDI NOTES

Prepare sequence with three keys to trigger alpha video, background video and live camera feed.

Give a few seconds of lead-in before notes

ISSUE) Hard-code live feed trigger or trigger manually
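A rough sketch of how a trigger file like videodrag_trigger.mid might be generated rather than hand-played: three note-ons after a lead-in, written out as a single-track Standard MIDI File. The assumptions are mine and untested against Arkaos: that middle C (note 60) is what the mapping screen calls C4 (some software labels it C3), that the default tempo of 120 bpm applies because no tempo event is written, and that a note-off one beat later is harmless in latch mode.

```python
import struct

NOTE_OFFSETS = {"C": 0, "C#": 1, "D": 2, "D#": 3, "E": 4, "F": 5,
                "F#": 6, "G": 7, "G#": 8, "A": 9, "A#": 10, "B": 11}


def note_number(name, middle_c_octave=4):
    """Convert a name such as 'D#4' to a MIDI note number.

    Assumes middle C (note 60) is written as C4; pass middle_c_octave=3
    for software that calls it C3.
    """
    pitch, octave = name[:-1], int(name[-1])
    return 60 + NOTE_OFFSETS[pitch] + 12 * (octave - middle_c_octave)


def vlq(value):
    """Encode a delta time as a MIDI variable-length quantity."""
    out = [value & 0x7F]
    value >>= 7
    while value:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    return bytes(reversed(out))


def trigger_file(note_names, lead_in_seconds=3.0, ticks_per_beat=480):
    """Build the bytes of a format-0 MIDI file that fires all notes together
    after the lead-in and releases them one beat later."""
    # No tempo event is written, so 120 bpm (two beats per second) is assumed.
    lead_in_ticks = int(lead_in_seconds * 2 * ticks_per_beat)
    track = b""
    for i, name in enumerate(note_names):
        delta = lead_in_ticks if i == 0 else 0
        track += vlq(delta) + bytes([0x90, note_number(name), 100])  # note on, channel 1
    for i, name in enumerate(note_names):
        delta = ticks_per_beat if i == 0 else 0
        track += vlq(delta) + bytes([0x80, note_number(name), 0])    # note off
    track += b"\x00\xff\x2f\x00"                                     # end of track
    header = b"MThd" + struct.pack(">IHHH", 6, 0, 1, ticks_per_beat)
    return header + b"MTrk" + struct.pack(">I", len(track)) + track
```

Calling `trigger_file(["C4", "D#4", "C#4"])` covers the alpha matte, background plate and live camera keys from the Arkaos notes; the result can be written to disk with `open(path, "wb")`. Leaving C#4 out of the list would correspond to triggering the live feed manually.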

PERFORMANCE NOTES

Three markers - Close up, Mid shot, Wide shot

Treat the guide cues like a rhythm game (i.e. jump to the mark)

ISSUE) Show punter their image on preview?

Pro - punter can line up moves in line with background video, camera can be fixed

Cons - punter may be more comfortable not seeing own image, put faith in camera operator

NEXT STEPS

Sponsorship for Chromatte and LiteRing

Focus group (i.e. party) to test initial clips

What is the headbin?

The headbin is a personal publishing system (or "blog") that I use as a communications tool. Everyone who has access to this particular blog has signed a non-disclosure agreement and is helping to progress the "SANCTUARY" and "ten weeks in the head bin" projects.

Starting on the home page, you can navigate the blog in a number of ways:

- search by keywords (enter them into the text field on the homepage and hit ENTER)

- browse by activity (“RECENT COMMENTS”)

- browse by reverse chronological order (“RECENT ENTRIES”)

- browse by category (via “BLOG SITE MAP” link)

You can also add new content:

- post a new Entry (via the "ADD AN ENTRY" link on the home page)

- post a comment (from the bottom of any existing Entry)

TIP: Click on the "HEADBIN" banner at the top of any page to return home.

May 10, 2004

Subtitling as machine-readable labelling?

Mark Robinson at subtitling company Independent Media Support (http://www.ims-media.com) gave the following useful information:

- Captioning/subtitling software is normally provided bespoke by companies such as SysMedia (http://www.sysmedia.com) and SofTel (http://www.softel.co.uk).

- Subtitling is normally farmed out to specialist companies. Only a few of the major broadcasters (BBC, etc.) keep it in house.

- In theory, the text normally sent to the monitor/screen could be diverted to another application for further processing (so the text becomes the machine-readable label for each element), although he has never heard of it being done.

Also spoke to John Boulton at SysMedia, who explained the following:

- Subtitles are generated from the data not as text within a template, but as bitmaps. The larger part of the application handles that conversion.
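To make the labelling idea concrete, here is a minimal sketch of what the "other application" might do with subtitle text if it can be intercepted before it is turned into bitmaps. It assumes the text arrives as SRT-style timed cues, which is my assumption and not something either contact described; the sample cues are made up.

```python
import re

TIMECODE = re.compile(r"(\d+):(\d+):(\d+),(\d+)")

# Made-up sample in SRT layout: index, time range, then one or more text lines.
SAMPLE = """1
00:00:01,000 --> 00:00:03,500
[DOOR SLAMS]

2
00:00:04,000 --> 00:00:06,000
Who's there?
"""


def to_seconds(stamp):
    """Convert 'HH:MM:SS,mmm' to seconds."""
    h, m, s, ms = map(int, TIMECODE.fullmatch(stamp.strip()).groups())
    return h * 3600 + m * 60 + s + ms / 1000


def parse_cues(text):
    """Return a list of (start, end, label) tuples from SRT-style text."""
    cues = []
    for block in text.strip().split("\n\n"):
        lines = block.splitlines()
        start, end = lines[1].split(" --> ")
        cues.append((to_seconds(start), to_seconds(end), " ".join(lines[2:])))
    return cues


def labels_at(cues, t):
    """Return the labels that apply at time t (in seconds)."""
    return [label for start, end, label in cues if start <= t < end]
```

Each cue then acts as a time-stamped label for whatever is on screen during that range, which is the property the re-mixable format would need.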

May 09, 2004

Regression testing

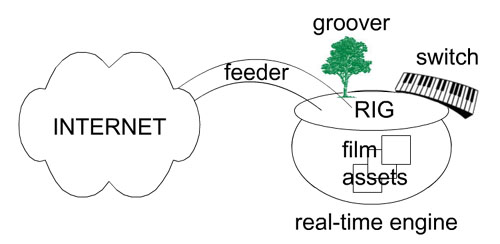

Reverse engineering the final goals of the project, we get a series of tests that the RIG must pass at all times:

GROOVER: Webcam support | Keyboard support | Controlpad support | Modifiable tracks

SWITCH: ReWire support

FEEDER: Upload MOD | Download MOD | Compile MOD

RIG formats

The creative framework defined by the RIG covers the following file types (formats):

text

2D image

3D model

animation

video

audio

May 07, 2004

Fun (and movie-related!) use of machine-readable text

http://www.cs.virginia.edu/oracle/

This site operates the game Six Degrees of Kevin Bacon (or any other actor, for that matter), by sourcing the archives of the IMDb (http://imdb.com). Follow the How it works link towards the bottom of the page for an explanation of the mechanics.
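For the curious, the textbook way to play this game on machine-readable cast lists is a breadth-first search over a graph of co-stars. The sketch below is that generic approach, not a description of how the site itself is built (its own explanation is behind the How it works link). The function names are mine, and any cast data passed in would need to come from a real source.

```python
from collections import deque


def build_graph(casts):
    """casts maps a film title to its list of actors.

    Returns {actor: {co_star: film linking them}}.
    """
    graph = {}
    for film, actors in casts.items():
        for actor in actors:
            links = graph.setdefault(actor, {})
            for other in actors:
                if other != actor:
                    links.setdefault(other, film)
    return graph


def bacon_path(graph, start, target):
    """Shortest chain from start to target as (actor, film, co_star) steps.

    Returns [] if start == target and None if no chain exists.
    """
    previous = {start: None}
    queue = deque([start])
    while queue:
        actor = queue.popleft()
        if actor == target:
            break
        for co_star, film in graph.get(actor, {}).items():
            if co_star not in previous:
                previous[co_star] = (actor, film)
                queue.append(co_star)
    if target not in previous:
        return None
    path = []
    node = target
    while previous[node] is not None:
        prev_actor, film = previous[node]
        path.append((prev_actor, film, node))
        node = prev_actor
    return list(reversed(path))
```

The length of the returned chain is the "Bacon number". Breadth-first search guarantees the chain is as short as possible because it visits all actors one film away before any actor two films away.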

May 05, 2004

Universite tangente - A la Carte

Excellently dystopian political maps produced by universite tangente (the tangential university). Who needs fiction?

Map of the prison-industrial complex (2002)

Map of infowar/psychic war - marrying the mission to the market (2003)

Identification Systems / Surveillance and Databases Map (2002)

May 04, 2004

The Pot vs The Plate

Two analogies for how the RIG operates.

1) The Pot

In which the RIG acts as a planter, facilitating the growth of new offshoot products from a fertile story universe which can be delivered on-the-fly by a proprietary engine.

2) The Plate

In which the RIG acts as a glorified sandwich maker. For cinema, we have a feast. On console (or desktop) the creative framework encourages play through collaboratively built open sample applications - the healthy interactive option. For most participants, interaction will be strictly limited - grab a few favourite bits and make your own sandwich.